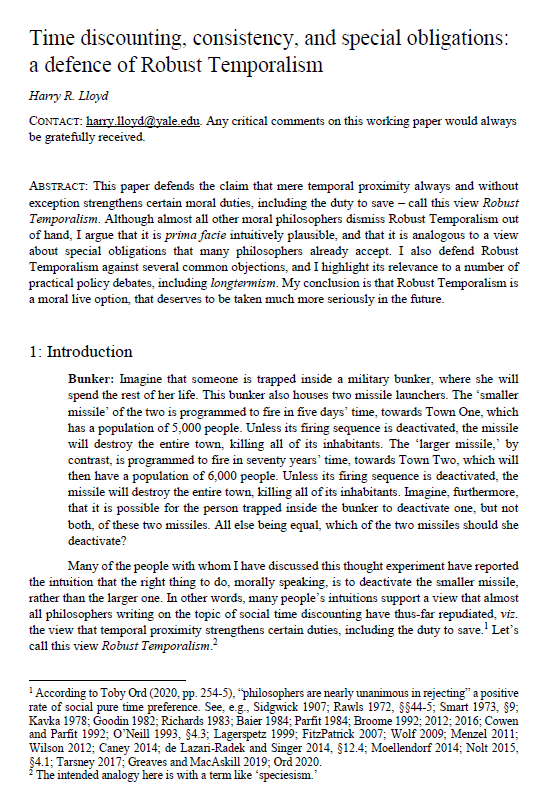

Time discounting, consistency and special obligations: a defence of Robust Temporalism

Harry R. Lloyd (Yale University)

GPI Working Paper No. 11-2021

This is the winning entry of the Essay Prize for global priorities research 2021. The uploaded paper is the full, revised draft of the abridged paper submitted for the prize competition.

This paper defends the claim that mere temporal proximity always and without exception strengthens certain moral duties, including the duty to save – call this view Robust Temporalism. Although almost all other moral philosophers dismiss Robust Temporalism out of hand, I argue that it is prima facie intuitively plausible, and that it is analogous to a view about special obligations that many philosophers already accept. I also defend Robust Temporalism against several common objections, and I highlight its relevance to a number of practical policy debates, including longtermism. My conclusion is that Robust Temporalism is a live moral option that deserves to be taken much more seriously in the future.
