David Thorstad | The scope of longtermism

DAVID THORSTAD: (00:03) Thank you very much. So everybody can see my screen now. I’d encourage you if you’re comfortable to follow along, not on my screen, but on your screen, and also, I’ve enabled comments on the Google Drive. So in good GPI tradition, if you have thoughts you’d like to share in the Google Drive, please feel free to leave me comments on this talk.

(00:23) Our point of departure today is-- Let me zoom in a little bit in case you're watching on my screen.

(00:29) Our point of departure today is a statement of what's often called axiological strong longtermism. And this is not quite the most recent statement from Hilary Greaves and Will MacAskill. It's the, as it were, the penultimate statement because I think this statement really brings out the issue I want to focus on today. So this is the claim that in a wide class of decision situations – focus on that bolded phrase wide class – the ex ante best thing to do is one of a small subset of options which are best for the long-run future, again, ex ante.

(00:59) So clarification, this is ex ante not ex post. In general, people think the ex ante version is where most of the controversy is at. And it's an axiological claim about goodness, not yet a deontic claim about what you ought to do, because I want to avoid the complications you get when talking about different deontic views.

(01:15) So the scope question for ASL is quite simply, when we focus on this claim that in a wide class of decision situations ASL is the correct description, how wide is that class? And here you get something of an odd polarization in views. So, many of our opponents – I don't know if anybody's written this down, but when you talk to people-- I think many people think the class is empty or near empty, that ASL would just be a crazy thesis to hold even in the cases like contemporary philanthropy where we'd like it to hold.

(01:42) The other extreme is also occupied. So Owen Cotton-Barratt states strong longtermism like this: the morally right thing to do is to make all decisions according to long-term effects. And he doesn't mean some, he doesn't mean many, he doesn't mean most, he doesn't mean nearly all, he really does mean all. This is how you should elect political candidates, decide what to teach in school, decide how to manage a tech workforce, decide what to write about in journalism, decide what to talk about at the dinner table, and so on.

(02:09) So both of the extremes are occupied here and the middle is actually pushed a little bit further than I'd like the middle to be. So most people at this institute are not going to go quite as far as Owen, but you are going to get quite frequently, the suggestion that when we look at cause specific decisions – so not, for example, public health versus existential risk but one public health intervention versus another – here too, it might be that longtermism governs the choice between these options. So I won't read you these passages, but let me just summarize them. So Hilary and Will, I think quite correctly, don't fully voice this suggestion. They say it might be the case that long-term consequences could reverse the preference between two deworming programs based on their short-term consequences. But they say in this example, they're only talking about the long-term on a scale of decades rather than centuries or millennia. But I think if you read other people, certainly Nick Beckstead in his dissertation, you'll get the suggestion that in choosing between competing public health programs, there too, it might be the long-term consequences rather than the short-term consequences that drive most of the expected value here.

(03:12) So my target is a more restricted scope for longtermism. Let me just view this as a single page. So I think it's quite right to say the scope of longtermism, contra our opponents, is nonempty, and in particular, it probably contains the case we care about, namely, if you're a cause-neutral philanthropist today and you're trying to decide what to do, you could do far worse than funding a longtermist cause. But I do want to suggest that the scope of longtermism thins out a good degree beyond that.

(03:41) So the structure of the argument today is going to be roughly the following. I'm going to spell out the type of axiological strong longtermism I'm interested in. I'm only interested in one of two ways that ASL could be true. I'm going to argue that ASL is, excuse me, plausibly true in the case we really care about, namely, a present day cause-neutral philanthropist. But beyond that, I'm going to give two arguments for the rarity thesis. This is going to be roughly the idea that the options you need to bear out ASL in a given decision problem are rare in our lives as decision makers. Then I'll look back to the good case. I'll argue that nothing I've said about rarity threatens ASL in the cases we really care about. But on the other hand, I will use the rarity thesis to pose two limitations to the scope of ASL beyond this case.

(04:23) So first in preliminaries – I'm going to give you a toy model. I've taken I think, a pretty simple model from Hilary and Will's paper and made it about as simple as it possibly could be. So I hope this is complex enough to make the point. A couple of assumptions I'll need. I'll need to assume that value is temporally separable across time. And I'll need a reading of ex ante values as expected value. If you don't like that just read an 𝐸 as ex ante rather than expected and you'll have to read me as making some commitments about ex ante value. I've read value as relative to a baseline, so you can think of the baseline as the status quo or maybe the effects of inaction. I don't think strictly speaking I need this assumption but it would be really quite complicated to state all these claims without a baseline. So I'm going to do everything in a baseline relative manner.

(05:09) Notation-wise, you shall use 𝑉 for value as usual and split it up into its long-term and short-term components, 𝐿 and 𝑆. Notation won't be very complicated, so no parentheses here, just 𝑉ₒ as the value of 𝑜, 𝐿ₒ is the long-term value of 𝑜 and so on. And just write ∆ before a value operator if you want to relativize it to a baseline, so ∆𝑉ₒ is the value of 𝑜 minus the value of the baseline and same for ∆𝐿ₒ and ∆𝑆ₒ. Relative to this, so the toy model I've given you holds with or without the ∆s with or without the 𝐸s, but I want them all here. You're going to ask what's the expected impact of taking an option 𝑜 on total value relative to the baseline of doing nothing or the status quo? Well, it's the expected change in short-term value relative to baseline plus, by separability, the expected change in long-term value. So that's I think enough to state the distinction I'm interested in.
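Written out, the toy model just described might look like the following. This is a sketch in the talk's own notation; the subscript 𝑏 for the baseline option is a label introduced here for illustration and is not on the slides.

```latex
\begin{align*}
  \Delta V_o &= V_o - V_b, \qquad
  \Delta S_o = S_o - S_b, \qquad
  \Delta L_o = L_o - L_b, \\
  \mathbb{E}[\Delta V_o] &= \mathbb{E}[\Delta S_o] + \mathbb{E}[\Delta L_o]
  \quad \text{(by separability, } V = S + L \text{, and linearity of expectation).}
\end{align*}
```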

(06:08) So call an option a swamping longtermist option if, a bit informally, its long-term value is not only greater than its own short-term value – again, when I talk about value, I always mean expected value – but actually greater than any achievable short-term value or disvalue from another option. So this is an option whose expected long-term impact is greater, again, in expectation than the maximum expected short-term impact of any other option and we really do mean positive and negative impacts here.
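The definition above can be sketched as a small check over a menu of options. This is only an illustration: the option names and numbers are made up, and reading "short-term value or disvalue" as an absolute value is an assumption about how to formalize the informal statement.

```python
from typing import Dict, Tuple

# Each option maps to (expected long-term change, expected short-term change),
# both relative to the baseline. All figures are hypothetical.
Options = Dict[str, Tuple[float, float]]


def is_swamping(name: str, options: Options) -> bool:
    """True if the option's expected long-term value exceeds the largest
    expected short-term value or disvalue (in absolute terms) of any option,
    including its own."""
    long_term, _ = options[name]
    max_short = max(abs(short) for _, short in options.values())
    return long_term > max_short


options: Options = {
    "asteroid_detection": (500.0, 1.0),
    "bed_nets": (5.0, 40.0),
    "do_nothing": (0.0, 0.0),
}

print(is_swamping("asteroid_detection", options))
print(is_swamping("bed_nets", options))
```

On these made-up numbers only the first option counts as swamping, since 500 exceeds the largest short-term magnitude of 40 while 5 does not.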

(06:38) So with this terminology in mind, there are two very different ways you could get axiological strong longtermism. So the first would be what I'm calling swamping axiological strong longtermism. So start with ASL: in a wide class of decision problems, the ex ante best thing to do is one of a small subset of things whose ex ante effects on the long-term future are near best. And add that this thing is also a swamping option. It also has long-term value that starts to swamp out short-term value. Here, you might think of somebody who thinks that, for example, existential risk reduction is a very important thing to do and the expected long-term value of existential risk reduction is not only greater than any expected short-term value or disvalue from this option but, in fact, greater than any expected short-term value or disvalue from any other option.

(07:21) On the other hand, you could have convergent ASL. This goes by other names, but I'm going to call it the convergence thesis which would be that ASL holds, but not in virtue of swamping. So convergent ASL happens when the best thing to do is near best for the long-term future but it's also at least not so bad for the short-term future. Here, I think you could do very well to think of Tyler Cowen who thinks one of the best things we can do for the short-term is to promote economic growth and one of the best things we can do for the long-term is to promote economic growth. So even if the long-term impacts don't swamp out the short-term impacts, you could still have convergence between long-term and short-term bestness. We should probably, if we wanted to be careful, separate out the swamping case from the convergent case, but for today, I'm just going to look at the swamping case.

(08:05) So why am I interested in the swamping way for ASL to hold and not the convergent way? The reason I haven't written down is just assessing convergent ASL in full generality is really hard and so I don't know if I can do that today. But the reasons I have written down, first, swamping ASL is the most revisionary thesis. It tells you that what you might have thought the best thing to do is, namely, maximize short-term value, is often not the best thing to do.

(08:29) It's also, if you read arguments by longtermists, often the kind of longtermism that people had in mind tends to be swamping ASL. So people definitely talk about both of these, but I think this is in many ways paradigmatic.

(08:40) And also you need the swamping version to go from axiological to deontic ASL in full generality. So the way you argue here is you argue that ASL is not only true as an axiological matter, but in fact, the long-term considerations are quite important as an axiological matter, and then you say, of course duties to promote impartial value can trade off against deontological side constraints and personal prerogatives and the like, and you argue that, as it were, the axiological matters are so important that they swamp out deontic objections, and that's how you get deontic ASL in the easiest way. But you won't be able to argue in that way unless you have something like swamping ASL in mind.

(09:18) So what I want to show here, I want to give you the rarity thesis. I want to argue that the vast majority of options that we confront in our lives as decision makers are not swamping longtermist options. So this is going to cause some problems for the scope of ASL.

(09:32) Now, I want to give you the good news first. So I think when we're talking about the decision of a present day cause-neutral philanthropist, someone who steps back and says I have $1 or $10. I could do anything with this. What do I want to do? ASL is probably a good description of this decision problem and we can argue in something like the following way. Here I think also, I'm following-- This isn't explicitly the argument that Hilary Greaves and Will MacAskill give in their paper but this is at least loosely inspired by their arguments. So let a strong swamping option be an option whose expected long-term impact not only swamps out in the way of exceeding any possible expected short-term impact but swamps it out by a lot, let's say by 10 times. And the argument from strong swamping is going to be a way to use this idea to show that in a given decision problem, say, oh I don't know, contemporary cause-neutral philanthropy, swamping ASL is a correct description of this decision problem. And so the load-bearing premise is going to be that in this decision problem, like contemporary philanthropy, you're going to find an option and you claim it's a strong swamping option. So I'll talk about funding asteroid detection research. Then you'll introduce what is, at least for the most part, a mathematical premise: that if you can find a strong swamping option in the decision problem, you've got ASL on that decision problem. I've footnoted that because that's basically math, but we can talk about that. And so the conclusion you'll draw is that, in your target decision problem, say contemporary philanthropy, ASL is the correct description of that decision problem. So the load-bearing assumption here is the existence claim that there is a strong swamping option in a given decision problem.
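One way the footnoted mathematical step might go is sketched below. The footnote itself is not part of this transcript, so this is a reconstruction under stated assumptions rather than the speaker's own derivation: the symbols 𝑀, 𝑜* and 𝑜_L are introduced here, and the maxima are assumed to exist with 𝑀 > 0.

```latex
\begin{align*}
  &\text{Let } M = \max_{o'} \bigl|\mathbb{E}[\Delta S_{o'}]\bigr|, \quad
   o^{*} = \text{the ex ante best option}, \quad
   o_{L} = \text{the option best for the long term}. \\
  &\text{Strong swamping: some option } o \text{ has }
   \mathbb{E}[\Delta L_{o}] > 10M, \text{ hence } \mathbb{E}[\Delta L_{o_L}] > 10M. \\
  &\text{Since } \mathbb{E}[\Delta V_{o^{*}}] \ge \mathbb{E}[\Delta V_{o_L}]: \\
  &\qquad \mathbb{E}[\Delta L_{o^{*}}]
     \ge \mathbb{E}[\Delta L_{o_L}] + \mathbb{E}[\Delta S_{o_L}] - \mathbb{E}[\Delta S_{o^{*}}]
     \ge \mathbb{E}[\Delta L_{o_L}] - 2M
     > 0.8\,\mathbb{E}[\Delta L_{o_L}] > 8M.
\end{align*}
```

On this reading, the ex ante best option comes within 20% of the best achievable long-term value and is itself a swamping option, which is the shape of conclusion that swamping ASL needs.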

(11:09) And you can argue for this in a given decision problem in something like the following way. So pick an option, oh like detecting near-Earth asteroids that could potentially destroy life on earth. You don't have to argue for this first premise, but it's going to make your life a lot easier if you're going to argue that we have a type of single track dominance, that is, the lion's share of the expected impact of this option on the long-term future is driven by a single causal pathway or effect. So detecting asteroids plausibly is going to have many different impacts on the long-term future, but most of the impact is going to be driven by the change or lack of change in the probability of extinction from asteroid impacts. Then with this premise in mind, you can only think about on-track effects as opposed to having to track down millions of different types of effects.

(11:54) So you can say focusing only on on-track effects, you've got a nontrivial probability of significant long-term benefit from taking this option, i.e., for some extremely large value of 𝑁 like the value of saving 8 billion lives, the probability of producing at least that much for a future benefit is nontrivial, say 1 in 100 million, again, considering only effects along the specified track, i.e., early warning of asteroid impacts.

(12:21) And of course, you also need to say that the probability of significant harm along this track, while perhaps not zero, is much smaller. So again with 𝑁, say, the value of 8 billion lives, the probability of causing long-term harms worse than that is much less than the probability of causing long-term benefits better than that. So these premises, if we argue for them in a given context, are usually going to be enough to get you the existence of a strong swamping option. There will always be exceptions. You can always grab the very, very far tail of the probability distribution and do strange things to it, but I claim that in general, it's not going to be helpful to think through these issues, except when it's clear that they could arise in context.

(13:02) So the remaining task here is to give you an example of why I think there are strong swamping options in the case of cause-neutral philanthropy and I'll give you an example from the past, actually, to illustrate the structure of the argument. And this is efforts to detect near-Earth objects, such as asteroids in the 1990s by the US government. So by way of background, NASA scientists classify asteroids by their diameter in terms of risk categories and the highest category, 1 kilometer or larger, is potentially catastrophic. It could destroy the Earth. Don't neglect risks of asteroid impacts. So we didn't used to think this, but now I think the consensus view is increasingly that what killed not only the dinosaurs during the Cretaceous period, but in fact, every single land dwelling mammal of any type above five kilograms, was in fact a catastrophic asteroid impact.

(13:53) And we shouldn't get too complacent about our ability to detect these things, either. So some of you might remember two years ago, there was an asteroid that came much closer to the Earth than the average distance of the moon. And what was surprising about this is we heard about it the day before it arrived. So there is some reason to worry here.

(14:10) And we do have from NASA, I think, a pretty reliable base probability of catastrophic impacts. By catastrophic impact, I mean, impact by an asteroid of 1 kilometer or greater in diameter. They put this as happening about once in every 6,000 centuries. So here's something I think is a pretty good candidate for a strong swamping option in the past. And then you'll have to make some argument with this structure in the future using your favorite intervention.

(14:26) So we funded the Spaceguard survey, which among other things, mapped 95% of the asteroids and similar objects with diameters greater than 1 kilometer. And it cost us in the ‘90s, about $70 million. So you can do a couple of things. One way you can check that this is a strong swamping option is to run through the premises, but I think it might be more helpful today to give you some more specific numbers.

(14:58) So let me just survey, in general estimates, of how many humans there might be in the future. These tend to range from about 10¹³ on the small side, to about 10⁵⁵ on the large side, and the variation here is, for example, how far you think we'll expand beyond Earth and whether you're counting only biological humans or simulated humans or the like.

(15:19) And let me just stipulate a probability of one in a million that if we got early warning of a catastrophic asteroid impact, that would change us from not surviving to surviving. Maybe we wouldn't do so well, but at least we could prepare as a government or stockpile food or something like that. Then you ask, okay, on these numbers, what's the expected cost of saving a future life?

(15:37) And let's be really conservative. Let's say we're only getting early warning from this project of an impact within a century. The expected cost per far future life is going to be $7 on the absolute lowest estimate of future humanlike lives and on any more liberal estimate, it's going to be pennies on the dollar.
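The arithmetic behind the $7 figure can be sketched as follows. The sketch assumes that the stipulated one-in-a-million is the overall probability that the survey makes the difference between extinction and survival; the talk does not spell out how the base rate of impacts enters, so that reading is an assumption, and the function name is illustrative.

```python
def expected_cost_per_life(cost: float, p_difference: float, future_lives: float) -> float:
    """Cost divided by the expected number of future lives saved."""
    return cost / (p_difference * future_lives)


COST = 70e6          # approximate cost of the survey, in dollars
P_DIFFERENCE = 1e-6  # stipulated probability of making the difference (assumed overall)

# Lowest and highest estimates of future lives quoted in the talk.
for lives in (1e13, 1e55):
    per_life = expected_cost_per_life(COST, P_DIFFERENCE, lives)
    print(f"{lives:.0e} future lives: ${per_life:.3g} per expected life saved")
```

With 10¹³ future lives the result is about $7 per expected life, and it shrinks in proportion as the estimate of future lives grows.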

(15:53) So by way of comparison, we usually put the best expected short-term interventions at several thousand dollars per life saved. So plausibly, here, we do have a case where expected long-term value is swamping out best case expected short-term value. That's the good news.

(16:10) I do think when we get beyond this case of contemporary cause-neutral philanthropy and also beyond adjacent decisions like becoming a Global Priorities researcher or something, we get two types of pressure against theses like ASL. So the rarity thesis, again, is going to say we don't get swamping longtermist options very often. And we need to make two complementary arguments for that because neither of these arguments is going to do it on its own. The first is what I'm calling the phenomenon of rapid diminution. So if you want to think in terms of probability distributions, this is just 'thin tails'. And the idea is just consider all the possible long-term impacts an action could make on the long-term future. Let them be an event partition with some bumpiness.

(16:52) In most cases, as you get larger and larger impacts, the probability of making these impacts gets smaller and smaller. So it's generally the case that if probabilities are decreasing, but not as quickly as sizes are increasing, you get quite a large contribution in expectation from far future benefits.

(17:09) But on the other hand, if you have what I've called rapid diminution, namely, the probabilities are just going down much faster than the impacts are going up, then you can get a quite modest impact, potentially, of far future large benefits.
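The contrast between the two regimes can be illustrated with a toy partition of outcomes. Everything here is a made-up example: impacts of size 10, 100, up to 10¹⁰, with probabilities falling either more slowly or more quickly than impacts rise, and the remaining probability mass assumed to sit on zero impact.

```python
def expected_value(impacts, probs):
    """Sum of impact times probability over the listed outcomes."""
    return sum(x * p for x, p in zip(impacts, probs))


def share_from_largest(impacts, probs):
    """Fraction of the expected value contributed by the largest impact."""
    return impacts[-1] * probs[-1] / expected_value(impacts, probs)


ks = range(1, 11)
impacts = [10.0 ** k for k in ks]             # 10, 100, ..., 10^10

slow_probs = [10.0 ** (-k / 2) for k in ks]   # falls more slowly than impacts rise
rapid_probs = [10.0 ** (-2 * k) for k in ks]  # rapid diminution: falls much faster

print("slow:  EV =", expected_value(impacts, slow_probs),
      "share from largest =", share_from_largest(impacts, slow_probs))
print("rapid: EV =", expected_value(impacts, rapid_probs),
      "share from largest =", share_from_largest(impacts, rapid_probs))
```

In the slow case the largest impact supplies most of the expected value; under rapid diminution it supplies almost none, and the total stays modest.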

(17:23) So an example of rapid diminution – and again there are many other ways to get this phenomenon – would be a normal probability distribution. If you have an intervention that, for example, saves a mean of 10 lives and has a standard deviation of 20 lives saved, then by the time you're, say, 10 standard deviations out, you've got almost everything that matters for the expected value of this intervention, but you're still only at the value of saving 210 lives not 8 billion.
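The normal-distribution example can be checked numerically. This is a minimal sketch using only the standard library; the function names are illustrative, and the tail contribution uses the standard identity E[X; X > a] = μ·P(X > a) + σ·φ((a − μ)/σ) for a normal variable.

```python
import math

MEAN, SD = 10.0, 20.0  # lives saved: mean and standard deviation from the example


def upper_tail_prob(z: float) -> float:
    """P(Z > z) for a standard normal Z."""
    return 0.5 * math.erfc(z / math.sqrt(2))


def upper_tail_contribution(z: float, mean: float, sd: float) -> float:
    """E[X; X > mean + z*sd] for X ~ Normal(mean, sd^2)."""
    pdf_z = math.exp(-0.5 * z * z) / math.sqrt(2 * math.pi)
    return mean * upper_tail_prob(z) + sd * pdf_z


z = 10.0
lives_at_z = MEAN + z * SD
print("lives saved at 10 standard deviations:", lives_at_z)
print("probability of doing better than that:", upper_tail_prob(z))
print("expected lives contributed beyond it:", upper_tail_contribution(z, MEAN, SD))
```

Ten standard deviations out corresponds to 210 lives, and what lies beyond that point adds a vanishingly small amount to the expected value of 10 lives.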

(17:47) And so the question is, why should we often, again not always but often, expect this kind of rapid diminution to happen? And the case here is for persistence skepticism. So the thought here is that just many of the options available to us as decision makers don't make persisting impacts on the long-term future. Again, this is supposed to be a substantive empirical premise that needs an argument. It probably needs more argument than I can give you here, but let me give you some sketch motivations for this view.

(18:13) So we do have now a burgeoning academic field called persistence studies that tries to study the persistence of historical phenomena and their effects into the present day. And something to note about this field is we often get failures of persistence, where we have options that looked like they were going to make a very big impact on the shape of the long-term future, but it turned out they didn't.

(18:34) So you might think about wartime bombing. The American bombing during World War II of Japan was quite heavy or during the Vietnam War of Vietnam and you might think as awful and catastrophic as this bombing was in the short-term, it would be even worse in the long-term, that you would see just steady migration out of the hardest hit cities, persistent economic depression, increases in crime rates, difficulty with civic services and the like. But it turns out in both cases, and this wasn't presumably a fluke, that's not what we find. That is, several decades later, you look at the effects of bombing between heavy hit and less heavily hit cities, on population sizes or poverty rates or consumption patterns or any plausible economic indicator, and you don't have significant effects. So here you have things that you expected to make long-term persisting impacts and it turns out the world bounced back.

(19:25) Now, of course, this kind of pessimism about persistence needs to be tempered by some optimism about persistence. So here are some effects, at least two of which I think I do believe were genuine persisting long-term effects of the shape claimed.

(19:38) So it's been claimed that the introduction of the plough led to a rapid increase in the gender division of labor because it was just much harder for women to farm with the plough. It's been claimed, quite plausibly, that the African slave trade led to reductions in social trust today in the hardest hit regions. Now they don't trust anybody. And of course, economic depression. If you take away the labor force for a country there's going to be a long lasting economic impact there. And it's also been claimed that the quote unquote-- I'm sorry, I forgot to cite this, but what have become known as the WEIRD personality traits in psychology, western, educated, industrialized and so on, might have been spread by the development of the Catholic Church.

(20:19) So these are important findings, but we have to take them with a couple of grains of salt. So first, remember, these findings are controversial. It's quite a new field and people go back and forth on whether or not they believe them. That's one small grain of salt.

(20:33) Second grain of salt, we don't have so many causative findings here. The rarity thesis is going to claim not that persistent long-term impacts never happen, but that they don't often happen. So we would need to do some number counting here.

(20:46) But I think the most important thing to focus on is, none of these cases is plausibly a swamping longtermist option. It's true that these are cases where short-term actions had very large long-term impacts. So the African slave trade reduced social trust, but they also had at least comparably large short-term impacts. The African slave trade sold millions of people into slavery. So we don't yet have great evidence coming out of this literature for a large number of things that could be plausibly swamping longtermist options.

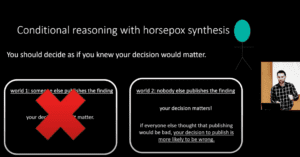

(21:16) That being said, we can't only lean on persistence skepticism. The argument can't be that it's just never the case that you're going to do something and you think the main impact of this thing is going to be its impact on the long-term future rather than the short-term future because we can think of examples where that's not plausible. So my favorite example is the Roman sacking of Carthage, which was plausibly, even at the time, something they could expect to be a momentous event, not only for the short-term, but the long-term. They knew very well it was going to shift permanently the international balance of power, that it was going to kickstart the Roman Empire and this was going to have, even if they didn't consider them, quite massive effects on the shape of Western culture lasting for thousands of years.

(21:57) So here, the argument is not that you should be skeptical about the persistence of large long-term impacts, but rather, you should be skeptical about our ability to forecast the magnitude and directionality of these impacts. So if you were a Roman general or even somebody today and you ask yourself, I think the sacking of Carthage probably had an impact on the future, what was the size and directionality of that impact? Here, I think it's much harder to give a plausible answer.

(22:23) So this is going to take us into our second argument for the rarity thesis and again, you have to make both of these arguments because neither of these arguments on its own is going to take you where you need. This is what's sometimes called the argument for washing out. It's not quite the way you might have seen the argument, but I do think it's the same argument. And if you're thinking in terms of probability distribution, just think about significant amounts of symmetry about the origin.

(22:46) So a bit more informally, I didn't give you any math here, washing out occurs to the degree that the potential positive and negative impacts of an action on the long-term future will cancel in taking expectations. And again, this is going to happen if your probability distribution over ∆𝐿ₒ is pretty symmetric about the origin. And there may be two ways you might get yourself into thinking that we would often have washing out, again, often not always. We'll need to talk about when this happens.
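In the limiting case of perfect symmetry, the cancellation is exact. The following is a sketch under two assumptions not stated in the talk: that ∆𝐿ₒ has a density 𝑓, and that its expectation exists.

```latex
\begin{align*}
  \text{If } f(x) = f(-x) \text{ for all } x, \text{ then} \quad
  \mathbb{E}[\Delta L_o]
    &= \int_{-\infty}^{\infty} x\, f(x)\, dx
     = \int_{0}^{\infty} x\, f(x)\, dx - \int_{0}^{\infty} x\, f(-x)\, dx
     = 0.
\end{align*}
```

A distribution that is only roughly symmetric about the origin leaves a correspondingly small expectation relative to the size of the impacts in play.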

(23:15) The first way you would get yourself in the mood for this was thinking about the paucity of our evidence. So start with a popular Bayesian idea that total ignorance about, let's say, the long-term value of an option should be represented by a symmetric prior. I'm not saying which prior. It could be uniform, it could be normal, it could be log normal. Take your favorite. But if you're totally ignorant about the long-term value of an option, you should put a symmetric prior about the origin over its long-term value changes. And then the weight-bearing premise here is evidential paucity, that we often just don't have very much evidence about the long-term effects of our actions. So if you put these two together, then of course, as an intermediate conclusion, you get that our posterior should show a strong effect of the prior because we just don't have that much evidence.

(23:57) And in particular, if the priors were largely symmetric, the posterior should not be entirely symmetric, but largely symmetric. So this is one reason you would expect to have a lot of cancellation. It's just because we don't have enough evidence to undo the cancellation we had in the prior.
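One simple way to see the pull of a symmetric prior is a normal-normal update. The speaker deliberately leaves the choice of prior open, so the normal model, the function name and every number below are assumptions made purely for illustration.

```python
def posterior_mean(prior_mean: float, prior_sd: float,
                   observed: float, noise_sd: float) -> float:
    """Posterior mean for a normal prior and one normally distributed
    observation with known noise (precision-weighted average)."""
    prior_precision = 1.0 / prior_sd ** 2
    evidence_precision = 1.0 / noise_sd ** 2
    return ((prior_precision * prior_mean + evidence_precision * observed)
            / (prior_precision + evidence_precision))


# Prior over long-term value change: symmetric about the origin.
PRIOR_MEAN, PRIOR_SD = 0.0, 10.0
FORECAST = 50.0  # a single optimistic estimate of long-term value

weak = posterior_mean(PRIOR_MEAN, PRIOR_SD, FORECAST, noise_sd=100.0)  # sparse evidence
strong = posterior_mean(PRIOR_MEAN, PRIOR_SD, FORECAST, noise_sd=1.0)  # rich evidence

print("posterior mean with weak evidence:", weak)
print("posterior mean with strong evidence:", strong)
```

With noisy evidence the posterior mean stays close to zero, so most of the prior's cancellation survives; only precise evidence moves it near the forecast.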

(24:11) There's another way to make this argument, and I'm not sure if this was the right decision or not, I hid the math here because it occurs to me there are about 20 different ways to make this precise. So we can talk about how you'd like to make this argument precise. But I think this is actually the same phenomenon.

(24:28) I'll try to talk about why, namely, forecasting pessimism-- So this is the idea we talked about with the case of Carthage, that even if you're often sure that your impacts on the long-term future are going to be large, it's often pretty difficult to forecast what these impacts are going to be. So that's the load-bearing premise is forecasting pessimism.

(24:48) Then I'll give you-- I go back and forth between calling this a mathematical and a normative premise because it's mostly mathematical, but of course, there's normative theory embedded in any kind of math you want to use here-- Is the idea that if you're quite uncertain about your forecasts and if you have mostly scattered forecasts but a couple of worked forecasts saying you have a very large expected long-term value or a couple of forecasts saying you have a very small long-term value, that that should lead you to discount these forecasts down a little bit.

(25:16) And one way to see this that I think makes the link to washing out pretty nice, maybe you like as-if discounting or a different presentation, but one reason I like to talk about this as a type of washing out is, you can think of it as a type of cancellation between potential optimistic and pessimistic forecasts we could have made under different circumstances. So you might say something like this. We have very sparse evidence, that's evidential paucity, so we know that nature could have easily dealt us a very different hand of evidence. So then you say, I made a forecast based on the evidence I had, but if nature dealt me different evidence, I know I would have made a very different forecast. So you need to take an expectation over the possible positive and negative forecasts you could have made. And you can pretty easily talk yourself into the thought that maybe a lot of the directionality and magnitude of the forecast is not anything about nature, it's just some noisiness in the evidential signal that nature fired to you. So that's going to get you some kind of a washing out between possible forecasts. And so that's one way to get yourself in the mood of discounting large forecasts based on forecasting uncertainty. If you like another way of doing it, that's fine, it's just it's not going to sound like washing out. So the load-bearing premise here, of course, is the thought that we should be pretty pessimistic in many domains, not all domains but many, about our abilities to forecast the long-term future.

(26:34) Why is this? We could argue about this all day and I think to some extent, really, I'd like to talk more about this in Q&A because this kind of an argument is where the action's at. But three things you might be concerned about.

(26:46) First is track records. So we sent out a research assistant and we said, find for us all the good meta analyses of long-term predictions at a timescale of 20 or 30 years. And they said, we've got a couple of dozen technology forecasts, political forecasts, forecasts like these. Not too many meta analyses. We do have a couple dozen, some of them are great, most of them are okay and some of them are quite poor. Then we ask, do we have any good track records of making predictions at a hundred or more years? And we actually couldn't, outside of some very rarefied scientific domains, find any credible meta analyses. So I just don't have anything to cite at those timescales. So then you ask, why should we believe or expect to be especially good at forecasting on a timescale of a thousand years or a million years or 10 million years? Given that we have such limited and mixed track records, that might give us some pause here.

(27:37) The second worry, of course, you have is practitioner skepticism. So there are many economists in the room I see, and in general, when you ask relevant stakeholders in these contexts – economists or risk analysts or futurists or the like – will you predict, for example, the effects of your actions on China's GDP in 374 years? Sometimes they'll make that prediction and I think one of the lessons of effective altruism work is maybe we do need to be a little bit more comfortable with making such predictions. But very often, they'll tell you to get lost. They'll refuse to make a prediction like that. They'll say, we just don't have enough evidence to do it. And some stakeholders, in particular the risk analysts, when you ask them to make these predictions, they have to make them, but they give you non-forecasting models. They give you other ways of dealing with future uncertainty than predicting it. So I think we have some uncertainty on behalf of key stakeholders about the makeability of these predictions.

(28:30) And also remember value is multidimensional. So when we look at track records, we look at track records of predicting very specific quantities, like how many malaria deaths will you avert by installing bed nets, but when we talk about far-future value, that's quite a multidimensional phenomenon. It's determined by public health outcomes like malaria, impacts on government funding for public health, impacts on poverty rates, impacts on regional stability, on international power. And so predicting all of these phenomena at once is, as it were, much harder than predicting the things we generally have track records of predicting.

(29:04) So summing up the rarity thesis, again, is going to be the view that swamping longtermist options, the options whose expected long-term value is just much greater or at least in the swamping case, greater than the best achievable expected short-term values, are relatively rare. And we made two arguments that have to be made in tandem here.

(29:23) So we had the rapid diminution idea that many options are unlikely to have large far-future impacts because the probabilities dip too quickly. And washing out we said, even if they do have potential large future impacts, they could also have potential large future negative impacts and we might often expect significant cancellation here.

(29:44) Now the good news, and this is the reason I don't call myself a skeptic about longtermism, is I think absolutely nothing I've said in this paper should touch the argument for longtermism in a case like contemporary cause-neutral philanthropy. And you can check this in two ways.

(29:59) So one way you can check this is just to check the arguments-- the premises of the argument for strong swamping and to show that these premises are enough to get you out of the worries and in fact that some of these worries, say, like rapid diminution help with some of the premises, say, nontrivial probability of significant harms.

(30:18) But I found it can be a little more impactful to just go through the concrete case I presented and invite you to make analogies to your favorite other case. So I claim, at least in the past, that asteroid detection, not deflection but detection was a pretty good example of a swamping longtermist option. And let's just show how it avoids all these worries.

(30:37) So the first worry was persistence skepticism. I said, tell me how we can make an impact on the long-term future that will last for a while? Not being dead is a status where, if you're not dead today, you're not dead tomorrow, you're not dead in two days, as long as you believe we're not such murderous savages that we're going to kill ourselves. So there's really no worry about persistence of extinction or non-extinction as a status.

(30:59) And likewise, forecasting. So I said, look, you should be really skeptical of our ability to forecast the long-term future and first I said, we just have terrible track records, but then I also said, except in some scientific domains, the key paradigm, of course, being astronomy. Even the Mayans could predict astronomical impacts on, you know, 100 years, 200 years, so there's no worry about track records here.

(31:19) And there's no worry about skepticism from practitioners. I said practitioners aren't ready to make these forecasts. But actually, the forecast I gave you was from NASA scientists and if you look at the impact damages and all the calculations from those, those are coming from NASA. So I can't say practitioners aren't ready to make these forecasts.

(31:34) And likewise, the multidimensionality of value isn't an issue here. It's definitely true that the value of the long-term future is a multifaceted matter and it's made up of many components, but at the same time, I don't think-- Obviously, some people are skeptical the long-term future will be good rather than bad, but I think most people listening to this talk will probably have some sympathy for the idea that it would be better for humans to continue to exist than not continue to exist. And really, that sympathy is all you need to get on board with the idea that preventing extinction risk could be a good thing. So it's not like the case of investing in public health where you're worried about destabilizing governments and other like worries. If you're an anti-natalist, I apologize, but I think many readers will be more sympathetic to the value claims I'm making here. So that's a good case.

(32:24) But I do think once we move outside the good case, we get some worries for the scope of ASL. And the first is what I'm calling the area challenge, that if we look at decision makers who are restricting their actions intentionally to specific cause areas, either fully philanthropic decisions or not philanthropic decisions, the argument might get a little bit less good.

(32:44) So if you return to the choice between I can fund Program A or Program B to deworm vulnerable populations, you wouldn't I think-- Nobody would want to suggest you could run anything like the argument of single track dominance. So certainly we don't have the simplifying premise that most of the long-term effects run along a single causal pathway. I think what the longtermist wants to suggest here is precisely the opposite. There are just very many different ways in which something like deworming could go on to affect the long-term future.

(33:12) Again, removing the rider about single track dominance, the longtermist might be able to get the second premise, that deworming has a nontrivial probability of providing very significant far-future benefit. Maybe you think you're deworming an area that's going to be a hub for future technological innovation and speed the pace of spaceflight and the like.

(33:34) But it's hard to see how you would get this claim without denying the next claim, that there isn't much probability of significant far-future harm, because you could also, for example, deworm the wrong area and not support future spaceflight. Or you could just as easily save the life of somebody who's going to be bad for the long-term future as someone who's going to be good for the long-term future. So we're not going to be able to get anything as clean as the argument we ran in the good case. Of course, that's fine. That doesn't yet say that there's anything wrong with being a longtermist in this case.

(34:04) But once we start losing the premises of that argument, we start to get a little more bite in the arguments from forecasting skepticism and persistence skepticism that I made earlier.

(34:13) So as far as track records go, I think most of us should admit that we just don't have any track record whatsoever of predicting, for the most part, public health outcomes, but certainly impacts on overall value from public health interventions, on a timescale of centuries or millennia. This isn't something we've done before.

(34:32) And as far as practitioner skepticism, even GiveWell doesn't try to do this. So the GiveWell foundation funnels every year, millions of dollars into causes like deworming and malaria and they write up hundreds of pages about the estimated impact of these interventions and they're quite sympathetic to longtermism. So you'd think that if anybody thought it were fairly feasible to be making forecasts of long-term impact in these kinds of cases, GiveWell would be doing it. But even GiveWell isn't doing it yet. And I think that isn't, of course, decisive evidence, but maybe that should give us some pause here.

(35:05) And likewise, I realize that people go back and forth on the Deaton stuff. But I take public health to be a pretty paradigmatic case of the multidimensionality of value where people really think it's a far step from the argument that you would, for example, directly save a life from malaria or from worms to the argument that you provide a net benefit to society or to the world just because there are so many other different things that could be going on here. So that's forecasting pessimism.

(35:32) Persistence skepticism, I honestly have nothing interesting to say here. I think just what should be said here is it's hard to see how you could overcome persistence skepticism in this case by pointing out the specific features of this case. It seems like you'd have to push more generally against the motivations for persistence skepticism. That's the first challenge.

(35:53) I think we're doing okay on time. I'll try to wrap up, Hilary, definitely with at least 10 minutes for questions and I think I can get us 12 or so.

(36:03) So the second challenge to the scope of longtermism is going to deal with option unawareness. So as a motivating example, imagine you're being chased down an alleyway by masked assailants and the question I ask you is, you're at the end of the alley, should you turn right or should you turn left or should you stop and fight? Actually, it's a trick question. I forgot to mention that you're currently passing a weak ventilation pipe, and that if you break the pipe you could spray your attackers with hot steam and that would be even better. And I assume that you know enough physics that you could infer this with high probability. You see the pipe is weak. You see the steam. You can see all the individual fields but you haven't even considered doing any of it.

(36:43) So we can parse this decision problem in two different ways.

(36:47) We can take an awareness unconstrained reading as it is and we can say here are your options. You can turn right, you can turn left, you can fight or you can break the pipe. And as soon as we do this, we're committed to saying the action-- the agent ought to break the pipe and the agent would act wrongly and irrationally by doing anything else than breaking the pipe because, of course, this is much better than any of the other options.

(37:07) Many people would want to push towards a more awareness constrained reading of your option set. They'll say if you happen to be a super spy and you've actually noticed the pipe, that's your decision problem, but otherwise, it might be that your options are turning left, turning right and fighting. And this will, I think, plausibly get you the verdict that what you ought to do is either to turn right or turn left and you wouldn't act wrongly by failing to break the pipe.

(37:28) So why would you like awareness constrained readings of rational choice? Probably many of you have your favorite arguments, but if you don't, here is, I think, the most modest argument I can give you. You might think there's something like the God's eye perspective. Let's call this the ex post perspective, from which every option physically or metaphysically possible to you should be counted as part of your decision problem, but many people-- I think Brian Hedden said something like this, I think the ex ante perspective is supposed to capture the agent's limitations, in particular, it captures your limited information about the world. It would be a little odd to not try to capture your limited information or awareness of the options available to you, so you might think at least ex ante choice should try to capture option unawareness.

(38:09) Now notice that option unawareness is going to do nothing, I hope this is a recurring theme here, to tell against ASL in the good case of philanthropy. And it's going to do nothing for two reasons.

(38:18) So the first is, we already know plenty of swamping long-term options or at least think we do. I gave you one example and you probably have others, so there's no awareness problem here.

(38:28) And the second-- I feel like I should be citing the Greaves and MacAskill paper more because the second option is also coming from them. If we weren't aware of any swamping longtermist options, here's something we could do. We could fund research until we found a swamping longtermist option and that research itself could be a swamping longtermist option.

(38:48) So, so far, so good. I'm not trying to refute ASL in that case. But when we move much beyond that case, unawareness is going to pose a problem for the scope of ASL. And the problem is a counting argument that goes something like this. So by the rarity claim, most of the options we face aren't swamping longtermist options. That's just what rarity says. And by option unawareness on most theories, you're going to model agents as facing decision problems with maybe 5 or 10 or a couple of dozen options. And if most of those options aren't swamping longtermist options, again it depends how strong 'most' is here, but if by 'most' you mean a pretty strong 'most', you're just going to get a counting inference that most of the choice sets we face don't have any swamping longtermist options either and so you won't get ASL on those problems.
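To make the counting inference concrete, here is a minimal sketch under assumptions that are not in the talk: it treats each option in a choice set as independently having some small probability p of being a swamping longtermist option, so the chance that a set of n options contains none is (1 - p)^n. The function name and the values of p are hypothetical and purely illustrative; the set sizes follow the "5 or 10 or a couple of dozen" mentioned above.

```python
def prob_no_swamping_option(p: float, n: int) -> float:
    """Chance that none of n options is a swamping longtermist option.

    Assumption (not from the talk): each option independently has
    probability p of being a swamping longtermist option.
    """
    return (1.0 - p) ** n


# Hypothetical rarity levels, chosen only for illustration.
for p in (0.001, 0.01):
    # Choice-set sizes of 5, 10, or a couple of dozen options.
    for n in (5, 10, 24):
        share = prob_no_swamping_option(p, n)
        print(f"p={p}, n={n}: P(no swamping option) = {share:.3f}")
```

On this toy model, how often a small choice set contains no swamping longtermist option depends on how strong 'most' is, which is the role p plays here. Independence is a simplification; correlated options would change the numbers but not the shape of the inference.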

(39:34) Maybe as an example, you can think of global health. So, imagine two different ways of posing deworming problems. Problem one is, of any possible deworming intervention I could take right now, which one should I take? I can take any region on the map of the millions of possible regions and I can deworm there and I can deworm in any amount, in any way, shape or form. Not so implausible that ASL would be true of this problem, just because if you throw enough options into a decision problem, you're probably going to get lucky. Certainly ex post you can hit a longtermist option, and even ex ante you might get lucky and hit a longtermist option there.

(40:09) But if you're awareness restricted, you ask, for example, of the 5 or 10 international deworming programs that are the most prominent, which one should I fund? I think this is much more like the kinds of questions many donors ask themselves. Here unawareness might be a problem for you because if you’ve only got 5 or 10 options, by the rarity thesis, it's quite possible that none of them could be swamping longtermist options. Here, unlike the cause unrestricted space, we can't just say, well fine, I've got an option for you, search for more options.

(40:39) And the reason is, because the project I just told you about, namely, examining millions of regions on the map and figuring out if I could deworm here or here or here is quite expensive. And if you're talking about something like extinction risk prevention that can bear literally trillions of dollars in capital investment, who cares? But if you're talking about causes where curing the entire problem of worms or malaria would cost in the billions of dollars, then very expensive option generation projects probably wouldn't be worth the cost. It would just be better to take a scattershot approach.

(41:13) So what have we done today?

(41:14) So we started with a version of axiological strong longtermism that just says, in a wide class of decision problems, the ex ante best thing to do is one of a small subset of ex ante best things and that thing is also a swamping longtermist option, so its long-term effects start to swamp the expectation of the best achievable short-term effects.

(41:34) Now I argued, as good news, that this is probably a plausible thing to say about decisions like contemporary cause-neutral philanthropic decision making and then maybe adjacent decisions like whether or not to go into global priorities research.

(41:46) But on the other hand, I gave you two arguments for the rarity thesis. This was the idea that the vast majority of options we confront as decision makers on a daily basis are not swamping longtermist options. I gave you two motivations for this. So first, rapid diminution or thin tails was argued for on the basis of persistence skepticism, and washing out or near symmetry was argued for on the basis of two nearly equivalent arguments, I think, evidential paucity and forecasting skepticism--pessimism. I can't call them both skepticism.

(42:16) And so I used these to pose two challenges to the scope of ASL. I argued first that when we restrict our attention to many specific cause areas as opposed to an unrestricted question, ASL might become less plausible. And second I argued that we get some pressure on the scope of ASL when we think about awareness constrained decision problems.

(42:35) So I will end there and take some questions. I apologize I haven't seen all of your comments yet but I promise I will look at them first thing during the break.